Scrape France 2, France 3, and TF1 TV news to analyse how they cover humanity's biggest challenge, fossil energies and climate change, and explore the data on a website.

- TF1 : https://www.tf1info.fr/emission/le-20h-11001/extraits/

- France 2 : https://www.francetvinfo.fr/replay-jt/france-2/20-heures/jt-de-20h-du-jeudi-30-decembre-2021_4876025.html

- France 3 : https://www.francetvinfo.fr/replay-jt/france-3/19-20/jt-de-19-20-du-vendredi-15-avril-2022_5045866.html

- JSON ➡️ https://github.com/polomarcus/television-news-analyser/tree/main/data-news-json/

- CSV, or actually compressed Tab-Separated Values (TSV) (if you don't know how to uncompress this data) ➡️ https://github.com/polomarcus/television-news-analyser/tree/main/data-news-csv/
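If you just want to peek at the compressed TSV files from Python, something like the sketch below may help. It assumes the files are gzip-compressed and start with a header row, which is not confirmed here; the column names (`title`, `media`) and the file name `demo.csv.gz` are made up for the demo.

```python
import csv
import gzip
import tempfile
from pathlib import Path


def read_compressed_tsv(path):
    """Read a gzip-compressed, tab-separated file into a list of dicts.

    Assumption: gzip compression and a header row on the first line.
    """
    with gzip.open(path, mode="rt", encoding="utf-8", newline="") as handle:
        return list(csv.DictReader(handle, delimiter="\t"))


def write_demo_file(path):
    """Create a tiny stand-in file so the sketch runs without the real data."""
    with gzip.open(path, mode="wt", encoding="utf-8", newline="") as handle:
        writer = csv.writer(handle, delimiter="\t")
        writer.writerow(["title", "media"])  # hypothetical column names
        writer.writerow(["Example title", "TF1"])


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        demo = Path(tmp) / "demo.csv.gz"  # hypothetical file name
        write_demo_file(demo)
        for row in read_compressed_tsv(demo):
            print(row["media"], row["title"])
```

To read a real file, point `read_compressed_tsv` at a file downloaded from the folder above and check the header for the actual column names.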

JSON data can be stored inside Postgres and displayed on a Metabase dashboard (see the "Run" section of this README), or can be found on this website :

- docker compose

- Optional: if you want to work on the code, you need the Scala build tool (sbt)

# with docker compose - no need for sbt

./init-stack-with-data.sh

# this script runs: docker-compose -f src/test/docker/docker-compose.yml up -d --build app

After running the project with docker compose, you can open Metabase at http://localhost:3000 and set it up in a few steps:

- configure an account

- configure the PostgreSQL data source (user/password - host: postgres - database name: metabase); see the docker-compose file for details

- You're good to go: click "Ask a simple question", then select your data source and the "Aa_News" table

Some examples are in example.ipynb, but I preferred to use a Metabase dashboard and SQL-based visualisations.

sbt "runMain com.github.polomarcus.main.TelevisionNewsAnalyser 3"

sbt "runMain com.github.polomarcus.main.SaveTVNewsToPostgres"

sbt "runMain com.github.polomarcus.main.UpdateNews"

The latest France 2, France 3 and TF1 replays are scraped with a GitHub Action, then the news items are stored inside this folder, partitioned by media and by date.

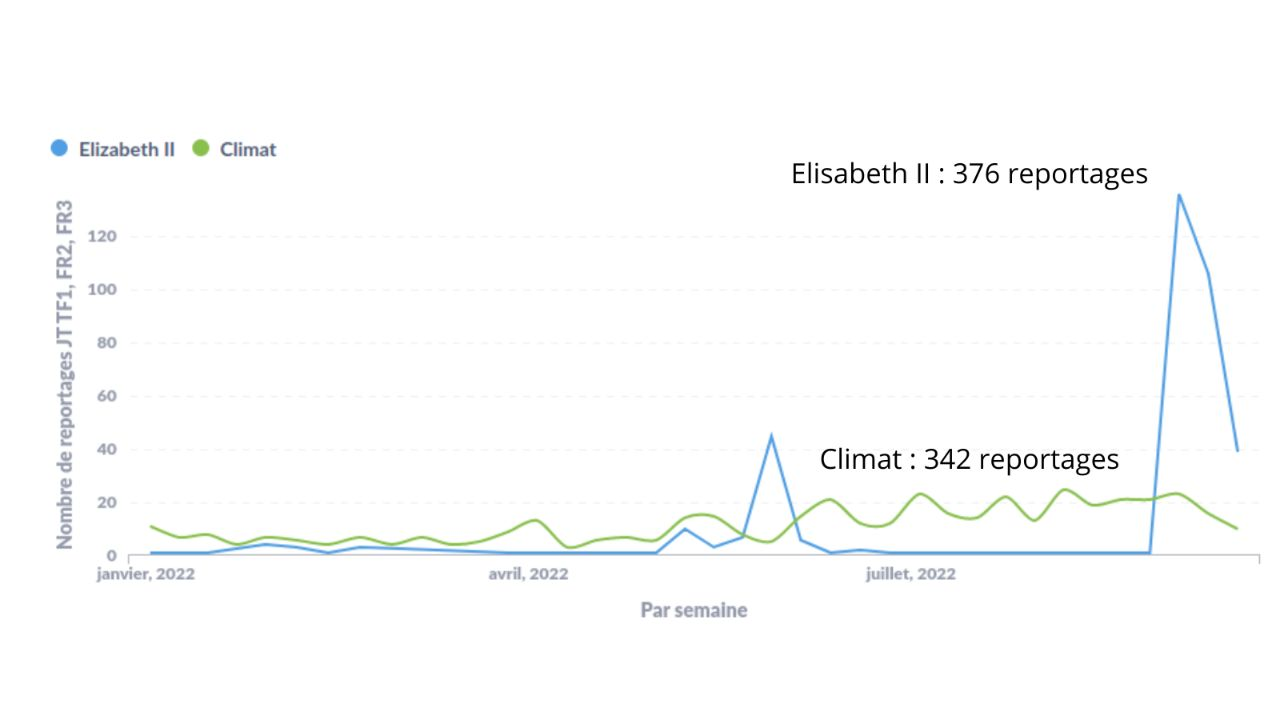

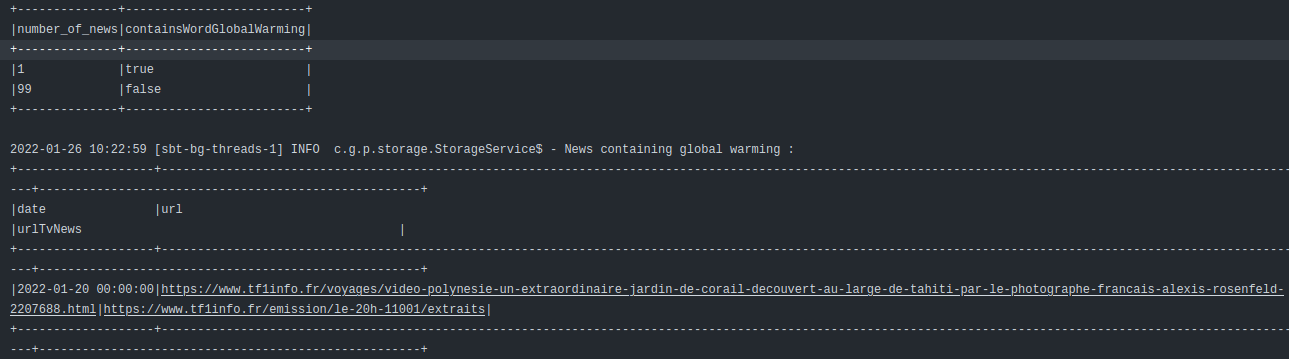
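To illustrate what "partitioned by media and by date" means, here is a rough sketch that groups news items by partition. The `media=<name>/date=<YYYY-MM-DD>` folder naming and the item fields are assumptions for the example; check the data folders for the real layout.

```python
from collections import defaultdict
from datetime import date
from pathlib import PurePosixPath


def partition_path(media, day):
    """Build a relative folder path for one (media, date) partition.

    Assumption: a key=value naming scheme, used here for illustration only.
    """
    return PurePosixPath(f"media={media}") / f"date={day.isoformat()}"


def group_by_partition(news_items):
    """Group news items (dicts with 'media' and 'date' keys) by partition."""
    partitions = defaultdict(list)
    for item in news_items:
        partitions[partition_path(item["media"], item["date"])].append(item)
    return dict(partitions)


if __name__ == "__main__":
    # Hypothetical items; the real records carry more fields.
    items = [
        {"media": "TF1", "date": date(2022, 4, 15), "title": "a"},
        {"media": "TF1", "date": date(2022, 4, 15), "title": "b"},
        {"media": "France 2", "date": date(2021, 12, 30), "title": "c"},
    ]
    for path, group in group_by_partition(items).items():
        print(path, len(group))
```

Partitioning this way means a reader interested in one channel or one day only has to open the matching folders.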

If a news item's title or description contains a "global warming keyword", it is marked as such with the field containsWordGlobalWarming: Boolean.
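The flag can be pictured roughly like this. This is a simplified Python sketch, not the project's Scala implementation: the keyword list is hypothetical, and plain substring matching after accent stripping is an assumption about how matching could work.

```python
import unicodedata

# Hypothetical keyword list for illustration; the project's real list may differ.
KEYWORDS = (
    "réchauffement climatique",
    "changement climatique",
    "dérèglement climatique",
)


def normalise(text):
    """Lower-case and strip accents so matching ignores case and diacritics."""
    decomposed = unicodedata.normalize("NFD", text.lower())
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")


def contains_word_global_warming(title, description, keywords=KEYWORDS):
    """Return True if the title or description contains any keyword."""
    haystack = normalise(f"{title} {description}")
    return any(normalise(keyword) in haystack for keyword in keywords)


if __name__ == "__main__":
    print(contains_word_global_warming("Le réchauffement climatique s'accélère", ""))
    print(contains_word_global_warming("Football", "Résultats du week-end"))
```

Substring matching is deliberately naive: it is easy to reason about, but a short keyword would also match inside longer unrelated words, so the list needs to be chosen with care.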

Some results can be found on this repo's website : https://polomarcus.github.io/television-news-analyser/ | https://observatoire.climatmedias.org/

- Click here : https://github.com/polomarcus/television-news-analyser/actions/workflows/save-data.yml

- Click on the latest workflow run called "Get news from websites", then on "click-here-to-see-data"

- Click on "List France 2 news urls containing global warming (see end)" to see France 2's URLs

- Click on "List TF1 news urls containing global warming (see end)" to see TF1's URLs

Check out the project website (https://observatoire.climatmedias.org/) locally

Go to http://localhost:8080

The sources are in the docs folder

# first, be sure to have docker compose up with ./init-stack-with-data.sh

sbt test # it parses some localhost pages from test/resources/

sbt> testOnly ParserTest -- -z parseFranceTelevisionHome