🔗 Markdown format - Examples

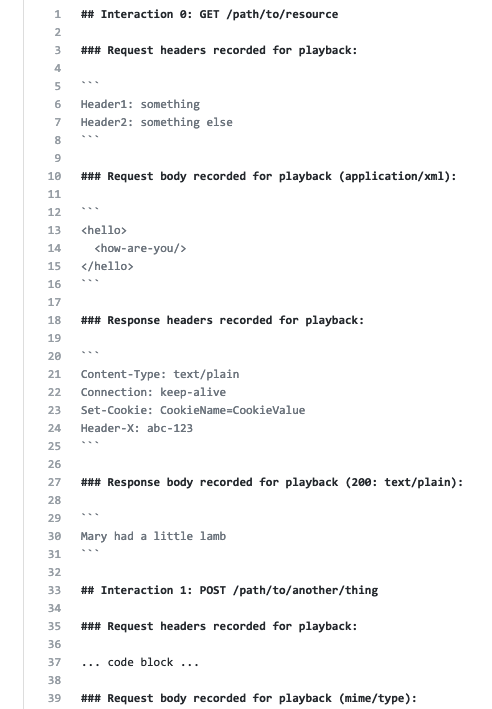

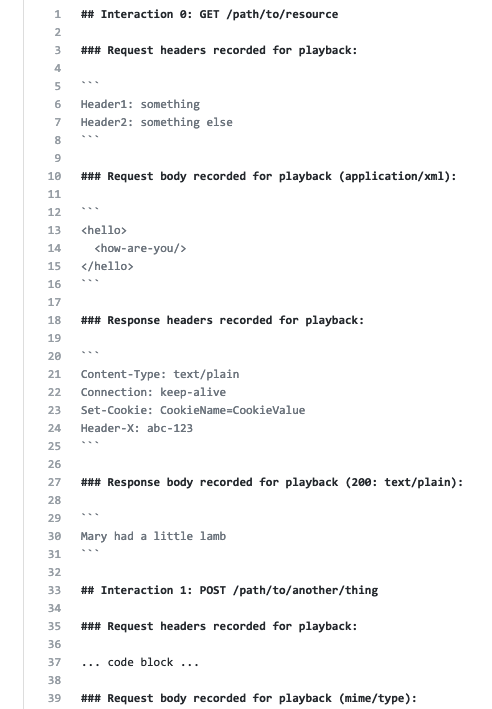

Here's a screen-shot of some raw markdown source:

(non-screenshot actual source: https://raw.githubusercontent.com/servirtium/README/master/example1.md)

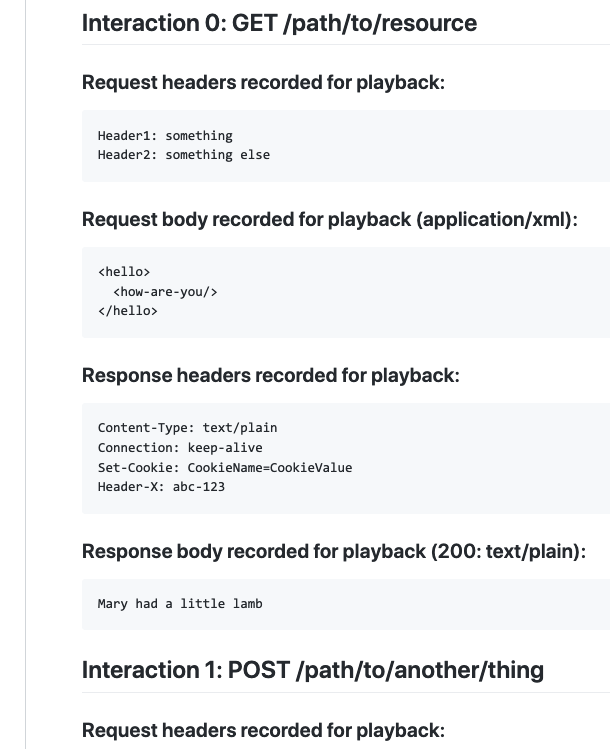

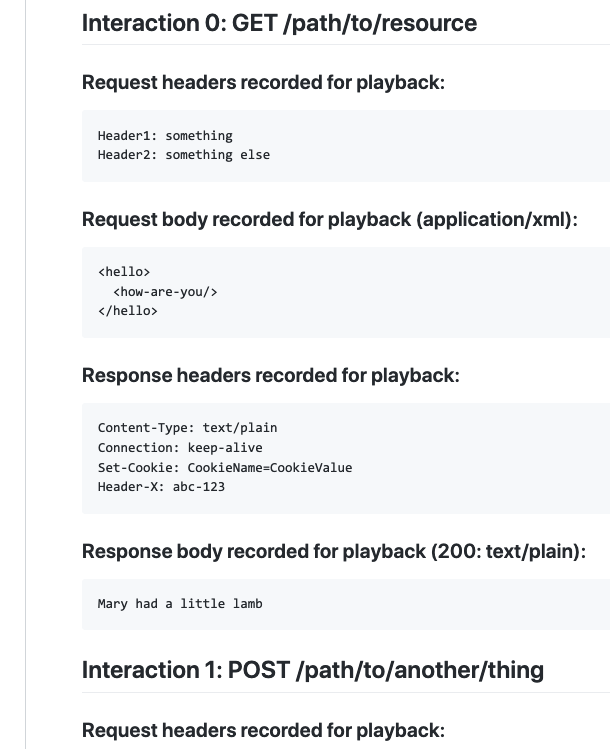

Here's how GitHub (for one) renders that:

That's the whole point of this format: it is human-inspectable in raw form, and your code portal renders it in a pretty way too. If your code portal is GitHub, then 'pretty' certainly applies.

(non-screenshot actual rendered file: https://github.com/servirtium/README/blob/master/example1.md)

... and you'd store that in VCS, as you would your automated tests.

🔗 Markdown Syntax Explained

🔗 Multiple Interactions Catered For

- Each interaction is denoted by a Level 2 Markdown heading, e.g. `## Interaction N: <METHOD> <PATH-FROM-ROOT>`
  - `N` starts at 0 and goes up by one for each further interaction in the conversation.
  - `<METHOD>` is GET or POST (or any other standard or non-standard HTTP method/verb name).
  - `<PATH-FROM-ROOT>` is the path without the domain and port, e.g. `/card/addTo.doIt`
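As a rough illustration of the heading convention above, here is a minimal Python sketch that picks the three parts out of an interaction heading. The function name and the regular expression are assumptions made for this example; they are not taken from any Servirtium implementation.

```python
import re

# Assumed pattern for a heading such as "## Interaction 0: GET /card/addTo.doIt".
INTERACTION_HEADING = re.compile(r"^## Interaction (\d+): (\S+) (\S+)$")


def parse_interaction_heading(line):
    """Return (number, method, path), or None if the line is not an interaction heading."""
    match = INTERACTION_HEADING.match(line.strip())
    if match is None:
        return None
    number, method, path = match.groups()
    return int(number), method, path


print(parse_interaction_heading("## Interaction 0: GET /card/addTo.doIt"))
# prints (0, 'GET', '/card/addTo.doIt')
```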

🔗 Request And Reply Details Per Interaction

Each interaction has four sections, each denoted by a Level 3 Markdown heading:

- The request headers going from the client to the HTTP server, denoted with a heading like so: `### Request headers recorded for playback:`
- The request body going from the client to the HTTP server (if applicable; GET does not use this), denoted with a heading like so: `### Request body recorded for playback (<MIME-TYPE>):`, where `<MIME-TYPE>` is something like `application/json`
- The response headers coming back from the HTTP server to the client, denoted with a heading like so: `### Response headers recorded for playback:`
- The response body coming back from the HTTP server to the client (some HTTP methods do not use this), denoted with a heading like so: `### Response body recorded for playback (<STATUS-CODE>: <MIME-TYPE>):`

Within each of those there is a single Markdown code block (fenced with three back-ticks) holding the details. The lines in that block may be reformatted, depending on the settings of the recorder. If the content is binary, there is a Base64 sequence instead (admittedly not so easy on the eye).
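To make the layout concrete, the following Python sketch splits one interaction into its four sections by looking for the Level 3 headings and the fenced block under each. It is only a sketch: the function name, the regular expression and the sample content are assumptions for illustration, and a real recording may contain details this does not handle.

```python
import re

# Three back-ticks, built this way so the example can itself sit inside a fenced block.
FENCE = "`" * 3

# Assumed pattern for the four Level 3 headings described above.
SECTION_HEADING = re.compile(
    r"^### (Request|Response) (headers|body) recorded for playback(?: \((.*)\))?:$"
)


def parse_sections(lines):
    """Map (direction, part) to the heading qualifier and the code-block content."""
    sections = {}
    current = None
    in_block = False
    for line in lines:
        heading = SECTION_HEADING.match(line)
        if heading and not in_block:
            current = (heading.group(1).lower(), heading.group(2))
            sections[current] = {"qualifier": heading.group(3), "content": []}
        elif line.strip() == FENCE and current is not None:
            in_block = not in_block  # a fence opens or closes the block
        elif in_block:
            sections[current]["content"].append(line)
    return {
        key: {"qualifier": value["qualifier"], "content": "\n".join(value["content"])}
        for key, value in sections.items()
    }


# Made-up sample interaction, for illustration only.
sample = [
    "## Interaction 0: POST /card/addTo.doIt",
    "",
    "### Request headers recorded for playback:",
    "",
    FENCE,
    "Content-Type: application/json",
    FENCE,
    "",
    "### Request body recorded for playback (application/json):",
    "",
    FENCE,
    '{"item": 42}',
    FENCE,
    "",
    "### Response headers recorded for playback:",
    "",
    FENCE,
    "Content-Type: text/plain",
    FENCE,
    "",
    "### Response body recorded for playback (200: text/plain):",
    "",
    FENCE,
    "OK",
    FENCE,
]

parsed = parse_sections(sample)
print(parsed[("response", "body")])
# prints {'qualifier': '200: text/plain', 'content': 'OK'}
```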

🔗 Recording and Playback

🔗 Recording a HTTP conversation

You'll write your test (say, in JUnit), and it will use a library (one your company may have written, or one from a vendor). For recording, you swap the real service URL for that of a running Servirtium middle-man server, which itself delegates to the real service. If that service is flaky, keep re-running the test manually until you get a non-flaky run, then commit the resulting Servirtium-style markdown to source control. Best practice is to configure the same test to have two modes of operation: 'direct' and 'recording'. This is not a caching idea. It is deliberate: you are either explicitly recording while running a test, or not recording and going direct to the service.

Anyway, the recording ends up, in the markdown format described above, in a text file on your file system, which you'll commit to VCS alongside your tests.

🔗 Playback of HTTP conversations

Those same markdown recordings are used in playback. Again, this is an explicit mode: when you run in it, the test will fail if there are no recordings in the dir/file in source control.
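The "fail if there is no recording" rule could look something like the Python sketch below. The function name and error message are hypothetical; the point is only that playback refuses to continue without a recording rather than quietly falling back to the real service.

```python
from pathlib import Path


def load_recording(path):
    """Read a recording for playback, failing loudly if it has not been recorded yet."""
    recording = Path(path)
    if not recording.is_file():
        raise FileNotFoundError(
            f"No recording at {recording}; run the test in recording mode first"
        )
    return recording.read_text(encoding="utf-8")
```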

Playback itself will fail if the headers/body sent by the client to the service (through the Servirtium library) are not the same as they were when the recording was made. The test failing in this situation is deliberate: you're using it to guard against potential incompatibilities. Masking/redacting and other manipulations may need to happen deliberately during the recording, to get rid of transient aspects that are not helpful in playback situations.

For example, any dates in the headers or the body that go from the client to the HTTP server could be swapped for some date in the future like "2099-01-01", or a date in the past like "1970-01-01".

The person designing the tests that run under recording or playback would work on the redactions/masking towards an "always passing" outcome, with no differences in the markdown regardless of the number of times the same test is re-recorded.
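A date swap of that kind might be sketched in Python as follows. The pattern only covers ISO-style `YYYY-MM-DD` dates and the function name is made up for this example; other date formats (HTTP `Date` headers, timestamps) would need their own patterns.

```python
import re

# Assumption: only ISO-style dates such as 2024-03-17 need masking.
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def mask_dates(text, replacement="2099-01-01"):
    """Replace every ISO-style date in a header or body with a fixed date."""
    return ISO_DATE.sub(replacement, text)


print(mask_dates('{"orderedOn": "2024-03-17", "qty": 2}'))
# prints {"orderedOn": "2099-01-01", "qty": 2}
```

Because the replacement is itself a fixed date, applying the mask to an already-masked recording changes nothing, which helps keep re-recordings free of differences.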

Note: How a difference in request-header or request-body expectation is logged in the test output needs to be part of the deliberate design of the tests themselves. This is easier said than done, and you can't catch assertion failures over HTTP.
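One way to make such a difference readable in test output is to render it as a unified diff, as in this Python sketch using the standard `difflib` module. The function name and labels are hypothetical, and where the resulting text is surfaced (a log line, a check in test teardown) remains a design decision for the tests themselves.

```python
import difflib


def describe_mismatch(recorded, actual, label):
    """Return a unified diff of recorded vs. actual text, or '' if they are identical."""
    diff = difflib.unified_diff(
        recorded.splitlines(),
        actual.splitlines(),
        fromfile=f"recorded {label}",
        tofile=f"actual {label}",
        lineterm="",
    )
    return "\n".join(diff)


# Made-up headers, for illustration only.
recorded_headers = "Accept: text/plain\nUser-Agent: demo"
actual_headers = "Accept: application/json\nUser-Agent: demo"
print(describe_mismatch(recorded_headers, actual_headers, "request headers"))
```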

Note 2: playback is a third mode of operation for the same test as in "Recording a HTTP conversation" above, meaning you have three modes of operation all in all: 'direct', 'recording' and 'playback'.
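The three explicit modes could be selected along the lines of the Python sketch below. Everything named here is an assumption for illustration: the `TEST_MODE` environment variable, both URLs, and the helper functions are hypothetical and not part of any Servirtium library.

```python
import os
from enum import Enum


class Mode(Enum):
    DIRECT = "direct"        # talk straight to the real service
    RECORDING = "recording"  # go through the middle-man server, which records
    PLAYBACK = "playback"    # replay from the committed markdown


REAL_SERVICE_URL = "https://service.example.com"  # hypothetical
SERVIRTIUM_URL = "http://localhost:61417"         # hypothetical local address


def select_mode(environ=os.environ):
    """Pick the mode explicitly; an unknown value raises ValueError instead of guessing."""
    return Mode(environ.get("TEST_MODE", "direct").lower())


def base_url_for(mode):
    """The client targets the real service only in direct mode."""
    return REAL_SERVICE_URL if mode is Mode.DIRECT else SERVIRTIUM_URL


print(base_url_for(select_mode({"TEST_MODE": "playback"})))
# prints http://localhost:61417
```

Rejecting unknown values keeps the choice of mode deliberate, in line with the point that this is not a caching scheme.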