
validation acc in the pretrain phase in pytorch #34

Comments

That model was trained using exactly the same code as in the GitHub repository. Please provide more information so that I can give you further suggestions, for example, how you process the dataset and which PyTorch version you use.

You should not add […]. May I know what GPU you’re using?

My torch version is 1.3.1, and my data preprocessing is the same as yours.

My GPU is an RTX 2080 with 8 GB of memory.


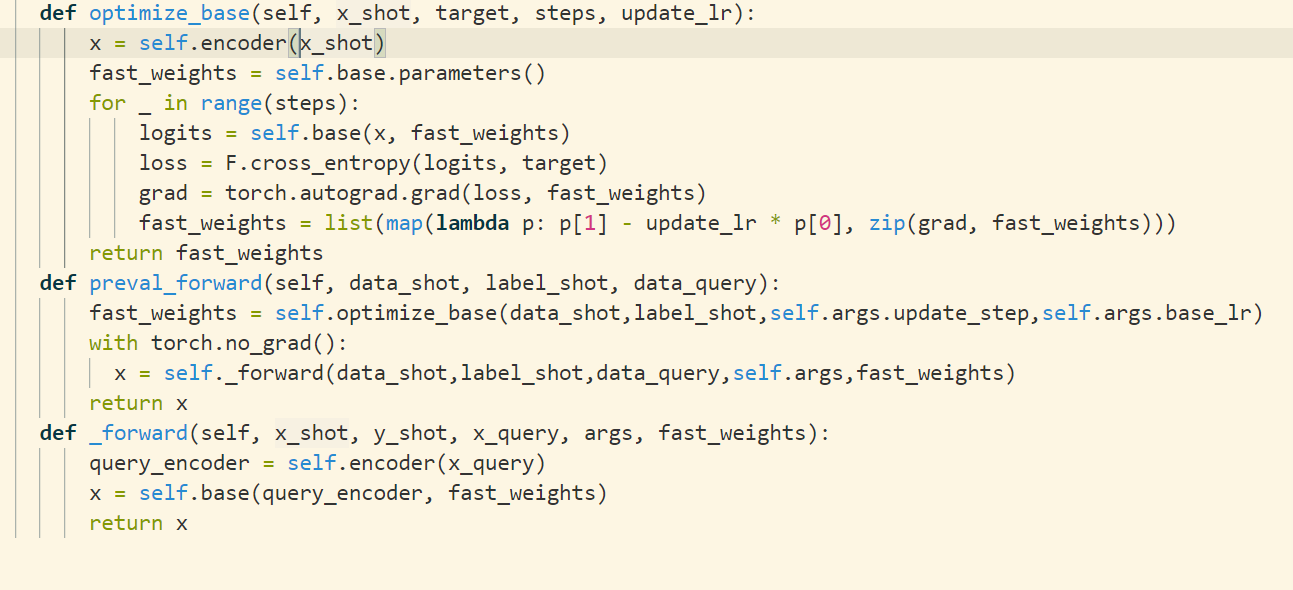

In your screenshot, you use a function named […]. Other parts of your code look correct. If you cannot use meta validation during the pre-training phase, you may use normal validation on the 64 classes instead. You may also try the pre-training code in DeepEMD and FEAT. We're using the same pre-training strategy.
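For readers following along, here is a minimal sketch of what "normal validation for 64 classes" could look like. It is not code from this repository. It assumes a held-out fraction of images from each of the 64 pre-training classes and plain top-1 accuracy. The helper names `split_per_class` and `top1_accuracy` are hypothetical, and the logits are plain Python lists so the sketch stays framework-agnostic.

```python
import random


def split_per_class(labels, val_fraction=0.1, seed=0):
    """Hold out a fraction of each class's samples for normal validation.

    `labels` is a list of integer class ids, one per training image.
    Returns (train_indices, val_indices). Hypothetical helper, not from the repo.
    """
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    train_idx, val_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Keep at least one validation sample per class.
        n_val = max(1, int(len(indices) * val_fraction))
        val_idx.extend(indices[:n_val])
        train_idx.extend(indices[n_val:])
    return train_idx, val_idx


def top1_accuracy(logits, targets):
    """Fraction of samples whose highest-scoring class equals the target."""
    correct = 0
    for row, target in zip(logits, targets):
        predicted = max(range(len(row)), key=row.__getitem__)
        correct += int(predicted == target)
    return correct / len(targets)
```

With a split like this, the validation images come from the same 64 classes the classifier is trained on, so the number it reports is ordinary classification accuracy and is not directly comparable to few-shot meta-validation accuracy on unseen classes.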

Oh, self.base is the base learner in your code. I will rerun this code once more and try the other approaches. Thank you!

Your change looks correct. I am not sure what makes your pre-training accuracy lower than expected; it should be around 60% for meta validation after pre-training. I'll check the related code for bugs. If possible, I also suggest running exactly the same code with our configuration (PyTorch 0.4.0). You may also try the other two methods I mentioned; both provide pre-training code.

When I ran your code with PyTorch 0.4.0 on an RTX 2080, I ran into some bugs in the base learner.

Thanks for reporting this issue.

I remember you provided your best pre-trained model in an issue, and its validation accuracy is 64%. I want to modify your backbone, but the best validation accuracy in my pre-training phase is 41%. When I reran your pre-training code, the best validation accuracy was 48%. Did you use any tricks when you pre-trained the model?
